AIRSound

In December 2019, I took part in another hackathon by Leonardo S.P.A., after the Innovathon one in November.

This time the hackathon was not limited to the conventional 2-3 days: each team had one month to develop an application addressing one of the three proposed challenges, with almost no constraints on the tools and technologies that could be employed.

Each team was composed of five members, a tutor from the Italian Air Force, and a mentor from the company.

We developed a cross-platform application to address the second challenge, the tap testing one, using a KNN classifier to classify the sounds and Kivy, a Python-based library, to build the Graphical User Interface of the app.

My Roles

Designed and implemented the GUI of the application.

Integrated the ML algorithms into the GUI.

Challenge

The three topics were:

- Create an Augmented Reality assistant to help aircraft maintainers during their routine and extraordinary maintenance procedures. To repair or replace a component in an aircraft, there are many procedures that must be carried out in order; some of them are for the safety of the maintainer, others ensure the correct installation of the new piece. An AR assistant can help maintainers by giving them precise directions directly in the AR visor.

- Create an algorithm to help aircraft maintainers perform the tapping test, a technique that consists of tapping on a mechanical component of an airplane and listening to the sound it produces. By listening to this sound, an expert maintainer can tell whether a piece is damaged and thus not suitable for flying.

- Create an assistant to help aircraft maintainers find and classify damage and issues on aircraft components and report them. While some damage is clearly visible, other damage is more difficult to find, especially on bigger components that have a larger surface to analyze. Besides, the operator needs to properly classify the kind of damage and fill in a precise report before asking for repairs.

All three challenges were interesting; however, we decided to focus on the second one: creating an algorithm for the tapping test.

Additional Context

As mentioned before, the tapping test is a technique to quickly verify whether a component is faulty. The test itself is not extremely precise, so sometimes it is necessary to perform more advanced tests. However, these tests take longer to execute and are more expensive.

On the other hand, the tapping test takes just a few seconds (the actual time depends on the size of the surface to test).

An app, validated by expert operators, can help junior maintainers and improve their performance. Moreover, with this app even a non-expert can perform such a test; indeed, I performed a live demo of the tapping test in front of the jury using a rotor blade from an AW139 (a helicopter model produced by AgustaWestland).

The tapping test needs to be performed often on vehicles, and it is not always possible to perform it in a quiet area; sometimes it is executed in the field or in very noisy hangars. The app, which acquires the sounds from the device microphone, can also help in these scenarios by filtering out external noise.

Finally, it can be integrated with existing systems, to report faulty components directly from the app and to schedule routine maintenance for the vehicles.

Approach

Our team was the only one composed of participants who had enrolled individually, so we had to work remotely for the whole month.

For this reason, we set up daily calls using the Cisco Meeting App offered by Leonardo S.P.A.

From the beginning, we divided the workload into subtasks using a Trello alternative and a Slack channel.

Eugenio and I conceived, designed, and implemented the Graphical User Interface of the application.

Bruno and Alessandro developed the Machine Learning Algorithm (KNN Classifier).

Fabio studied the online platform provided by Oracle to improve the efficiency and the training speed of the ML algorithms.
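The KNN classification mentioned above can be illustrated with a minimal sketch. This is not the team's actual code: the two features per tap (dominant frequency and decay time), the training values, and the function name `knn_predict` are all hypothetical, chosen only to show how a tap sound reduced to a feature vector can be labelled by a majority vote among its nearest neighbors.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, sample, k=3):
    """Return (label, vote_share) for `sample` using a k-nearest-neighbor vote."""
    if not 1 <= k <= len(train_features):
        raise ValueError("k must be between 1 and the number of training samples")
    # Euclidean distance from the sample to every labelled training vector.
    distances = sorted(
        (math.dist(features, sample), label)
        for features, label in zip(train_features, train_labels)
    )
    # Majority vote among the k closest training samples.
    votes = Counter(label for _, label in distances[:k])
    label, count = votes.most_common(1)[0]
    return label, count / k


# Hypothetical features per tap: (dominant frequency in kHz, decay time in ms).
TRAIN_FEATURES = [(2.1, 40.0), (2.0, 42.0), (2.2, 38.0),
                  (1.2, 15.0), (1.1, 18.0), (1.3, 14.0)]
TRAIN_LABELS = ["ok", "ok", "ok", "faulty", "faulty", "faulty"]

if __name__ == "__main__":
    print(knn_predict(TRAIN_FEATURES, TRAIN_LABELS, (2.05, 41.0)))
```

In a real pipeline the features would be extracted from the recorded audio and scaled to comparable ranges before computing distances; both steps are left out here for brevity.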

At the beginning, I sketched out an interactive prototype of the interface using Axure RP; following the double diamond design process, we iterated on the initial prototype together, adding features and redefining elements until we reached a consensus and settled on a definitive design.

Additional Challenges

To help maintainers and operators efficiently, virtual assistant applications must be easy to use and intuitive; in particular, their interface should not be cluttered with unnecessary elements, and the essential ones need to be clearly visible and easy to find. In addition, their function must be clear, to avoid slips and mistakes.

For these reasons, we decided to reduce the elements present in the initial prototype to the bare minimum.

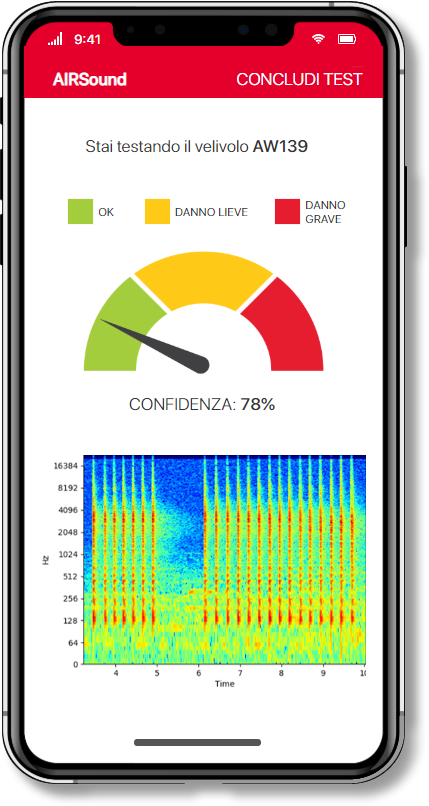

For example, the prototype initially had a real-time graph of the sound spectrogram; however, it is difficult to tell whether a component is faulty just by looking at the spectrogram. Thus, we decided to remove it from the test screen and include it only in the final report, for additional checks.

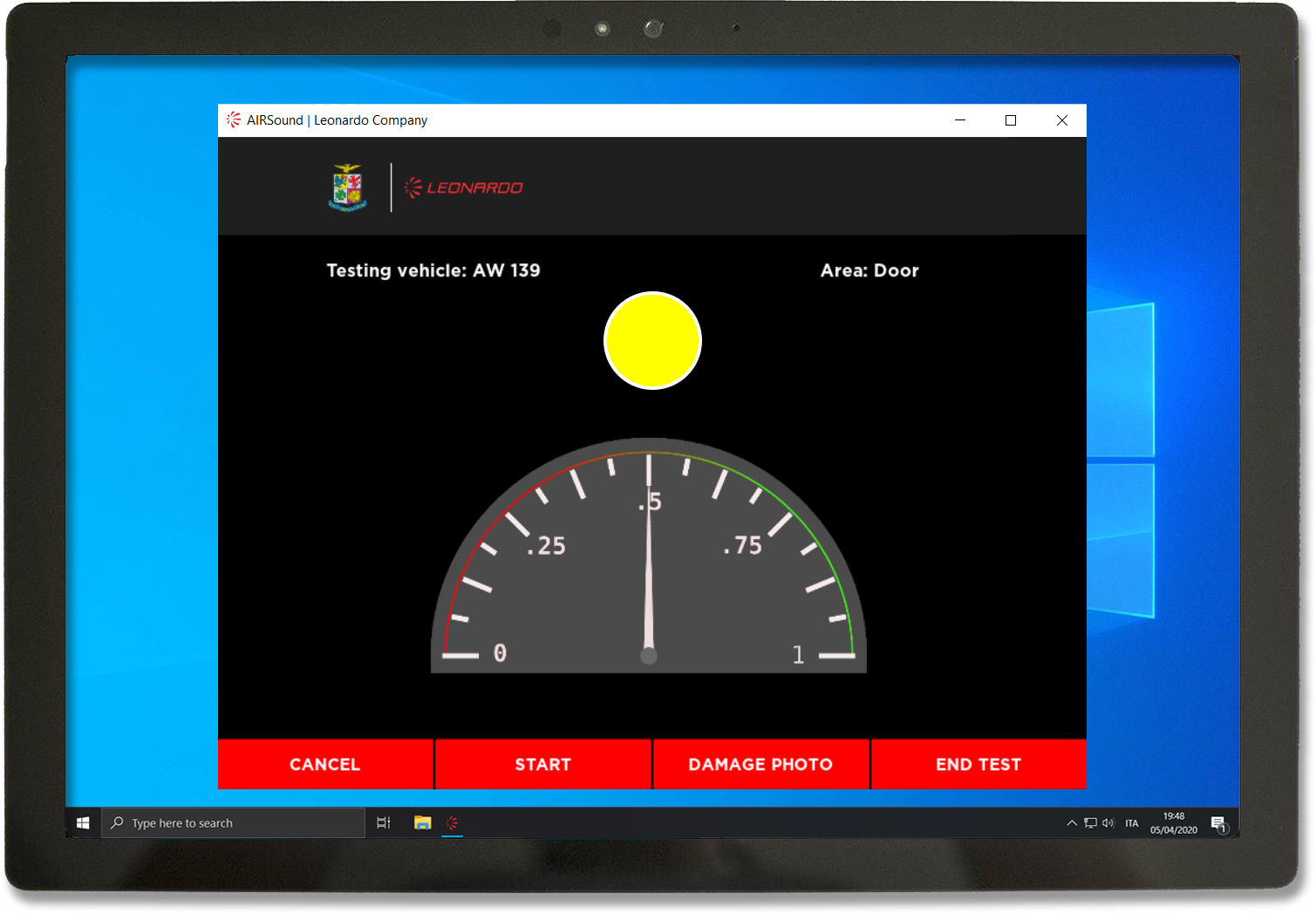

Also, we switched from a mobile version of the prototype to a tablet version; tablets, being bigger, are easier to operate, especially with gloves, and can be positioned right next to the operators, so that they do not need to hold the device.

Finally, we also wanted the app to run as smoothly as possible; since it runs Machine Learning algorithms in the background and has to update the user interface in real time, a tablet like the Microsoft Surface Pro 4 that we used for the presentation guaranteed us enough performance.

Below, from left to right, are the initial mockup and the improved one, both designed for smartphones.

Below is the final version presented at the hackathon finals, showcased on a Microsoft Surface Pro 4.

Taking into account the main and the additional challenges described above, we developed this interface, which comprises:

- Brief recap of the vehicle and the component being tested.

- Semaphore (traffic light): concise test status indicator (yellow = waiting for input, red = faulty component, green = component OK).

- Gauge: Detailed test indicator (indicates how good or faulty a component is).

- Cancel button: goes back to the previous screen and cancels the test.

- Start button: starts the test (activates the ML algorithms in the background).

- Damage photo: for each faulty component, the operator has to take a picture of it, which will be included in the report.

- End test button: terminates the test once all the components have been tested.

We removed the confidence indicator because it was difficult to read from a distance and did not add much value to the test screen itself.

Buttons are bigger than the ones in the mockup, and easy to reach when holding the tablet with only one hand.
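As an illustration of how the two indicators listed above could be driven by the classifier, here is a small sketch. It rests on assumptions that are not stated in this write-up: the gauge is taken to be the share of nearest neighbors that voted "faulty", and the semaphore turns red at a hypothetical threshold of 0.5. The function names are invented for the example.

```python
def gauge_value(faulty_votes, k):
    """Share of the k neighbors that voted "faulty" (0.0 = sound, 1.0 = faulty)."""
    if k <= 0 or not 0 <= faulty_votes <= k:
        raise ValueError("expected k > 0 and 0 <= faulty_votes <= k")
    return faulty_votes / k


def semaphore_color(gauge, threshold=0.5):
    """Map a gauge reading to the semaphore color.

    `gauge` is None while the app is still waiting for a tap to classify.
    The 0.5 threshold is an assumption made for this sketch.
    """
    if gauge is None:
        return "yellow"
    return "red" if gauge >= threshold else "green"


if __name__ == "__main__":
    print(semaphore_color(None))               # waiting for input
    print(semaphore_color(gauge_value(4, 5)))  # mostly "faulty" votes
```

Keeping the coarse status (semaphore) separate from the detailed reading (gauge) mirrors the interface: the operator can read the color at a glance and consult the gauge only when needed.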

Results

After one month of hard work, our team finally met in Florence (Italy) for the last two days of the hackathon. There, we were able to complete and refine the application and got it ready for the final presentations. In the end, we won the second prize and an acknowledgement for the best teamwork.

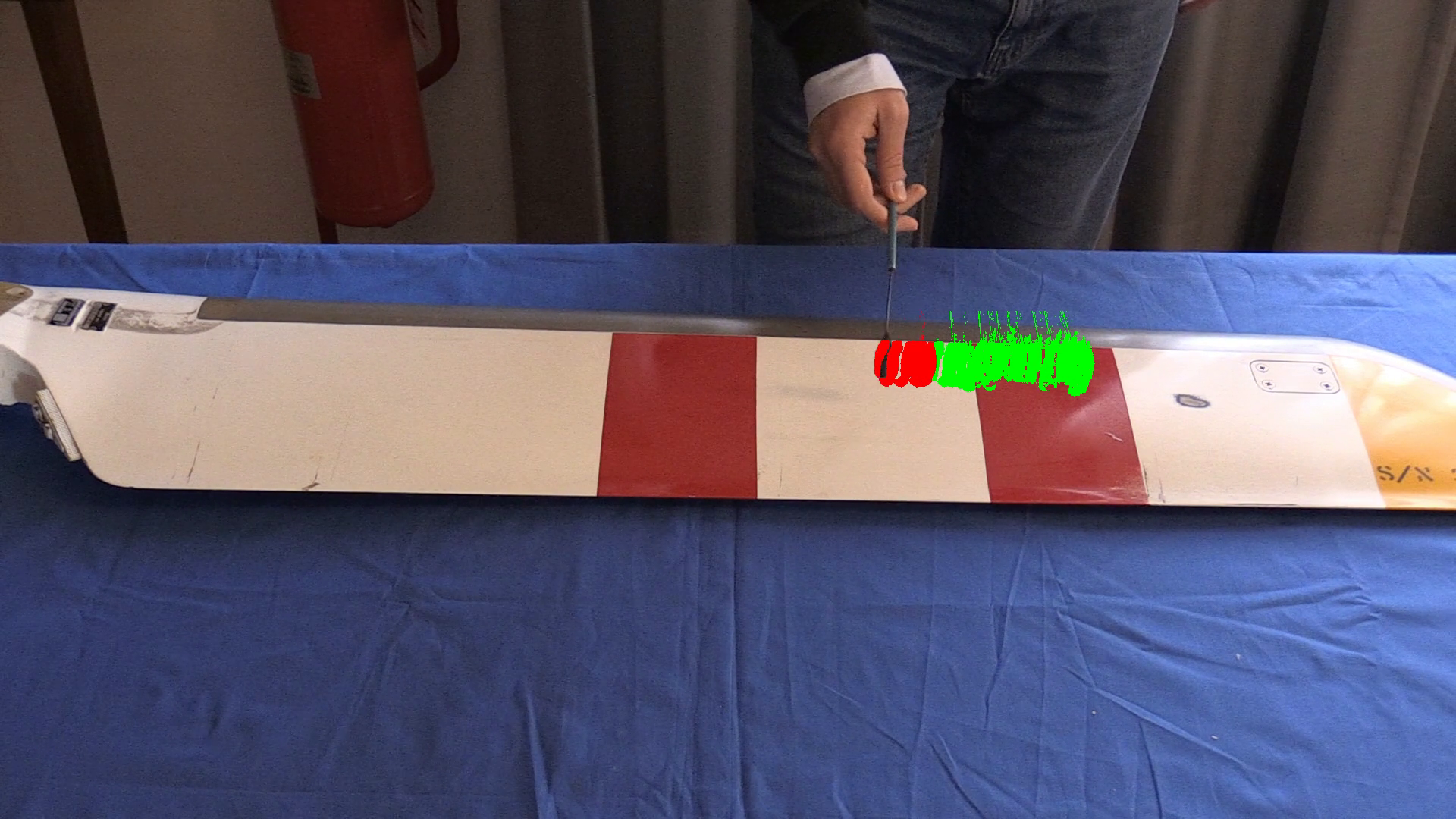

The finalized app does not need an internet connection, since all the algorithms are executed directly on the device (edge computing).

However, if an internet connection is available and a video of the test can be captured, it is possible to leverage the power of cloud computing and perform more advanced analysis. In particular, we developed an algorithm that analyzes the video, recognizes the position of the tapping hammer, and colors it red if the component is faulty at that exact point, green otherwise.

The figure below shows the advanced analysis results obtained with this algorithm.

Team Avanti

Our team, from left to right: Major De Angelis Roberto, Eugenio, Bruno, Fabio, Alessandro, me, and Daniele.